John Tolley, October 24, 2017

Researchers at Purdue University are reading people's minds.

Consensually, of course.

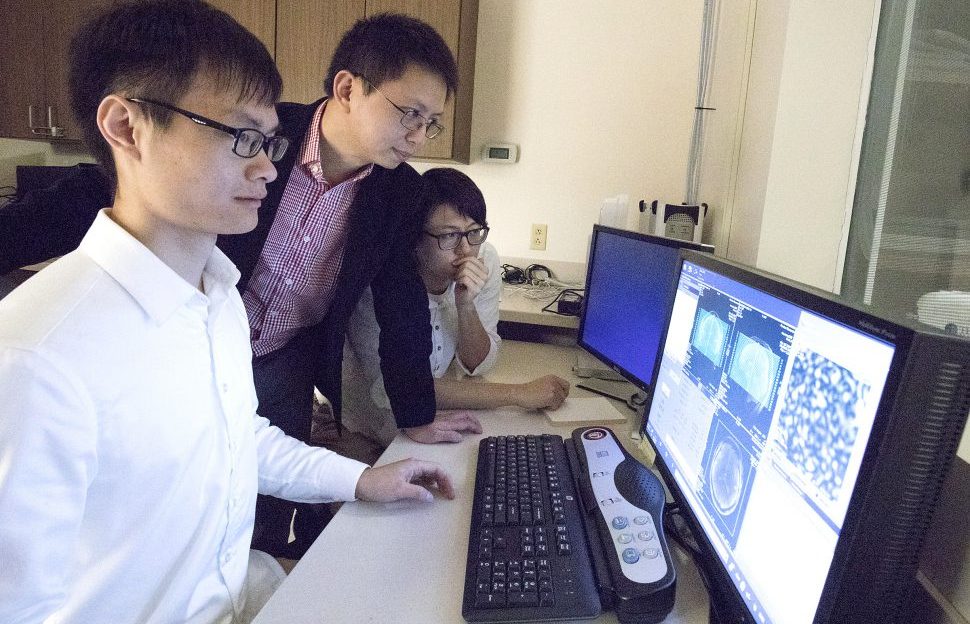

Using fMRI scanning technology, a group of biomedical and computer engineers working under Assistant Professor Zhongming Liu are exploring the brain activity of subjects as they are shown specific video clips containing people, animals, nature and actions.

When images appear, the areas of the brain that respond are tracked and analyzed using a deep-learning algorithm known as a Convolutional Neural Network. The team hopes to gain deeper insight into how the brain functions, which could have far-reaching implications for the development of complex artificial intelligence.

Convolutional Neural Networks are used to aid computers and other devices in facial and object recognition. The Purdue study is the first attempt at using one to map the areas of the brain tied to understanding visual signals.

"That type of network has made an enormous impact in the field of computer vision in recent years," said Liu, speaking with the Purdue Newsroom. "Our technique uses the neural network to understand what you are seeing."

The researchers first created predictive models to train their Convolutional Neural Network. Those models were then employed to help decipher the fMRI data collected from the study subjects and "recreate" the videos.

According to Ph.D. student Haiguang Wen, the lead author of a recent paper in the journal Cerebral Cortex outlining the findings, this work represents a monumental leap forward in understanding how vision is processed across the brain.

"Neuroscience is trying to map which parts of the brain are responsible for specific functionality," Wen said. "This is a landmark goal of neuroscience. I think what we report in this paper moves us closer to achieving that goal. A scene with a car moving in front of a building is dissected into pieces of information by the brain: one location in the brain may represent the car; another location may represent the building. Using our technique, you may visualize the specific information represented by any brain location, and screen through all the locations in the brain's visual cortex. By doing that, you can see how the brain divides a visual scene into pieces, and re-assembles the pieces into a full understanding of the visual scene."

The team's research was made possible through funding from the National Institute of Mental Health and was conducted by the Weldon School of Biomedical Engineering and School of Electrical and Computer Engineering's Laboratory of Integrated Brain Imaging in affiliation with the Purdue Institute for Integrative Neuroscience. For more information, visit the link above. LIBI's findings can be accessed here.

See what's coming up live on B1G+ every day of the season at BigTenPlus.com.
